The GPU Revolution: How Aethir is Reshaping AI and Gaming Infrastructure

A Deep Dive into the DePIN Network with $36M in Annual Recurring Revenue

Welcome to the alpha please newsletter.

gm friends, today we are talking about Aethir. Last week, Aethir announced they have $36M in ARR (annual recurring revenue), which would rank them in the top 20 crypto protocols by revenue this year.

Have I got your attention now? Good, this will be worth the read.

As artificial intelligence rapidly advances towards AGI, the demand for compute resources has skyrocketed, creating a widening gap between those who have access to powerful GPUs and those who don't. Enter Aethir, an innovative decentralized physical infrastructure network (DePIN) that aims to democratize access to cloud computing resources.

Founded with the vision of making on-demand compute more accessible and affordable, Aethir has built a distributed network that aggregates enterprise-grade GPU chips from various sources. This network is designed to support the growing demands of AI, cloud gaming, and other compute-intensive applications.

For this interview, I sat down with Aethir co-founder Mark Rydon to discuss the company's unique approach.

Enjoy the alpha.

*This newsletter was produced in collaboration with Tailored, and I personally run several Aethir nodes.

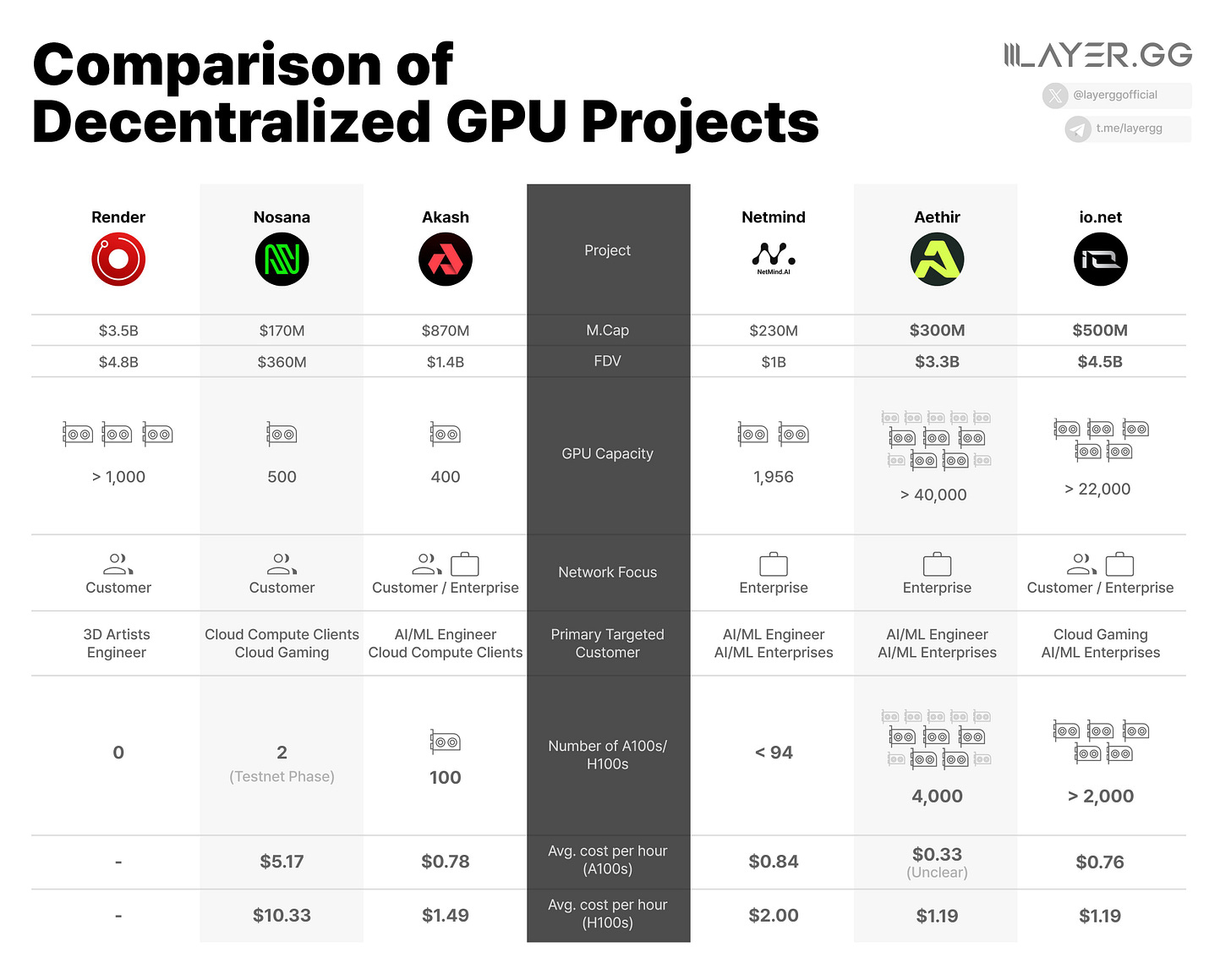

Given the recent surge in competition within the decentralised GPU sector, what sets Aethir apart from the other players in the space?

That's a really good question. I'll answer it in two parts. First, I'll explain the problem we address because that's key to understanding. There's this global compute scarcity, which I'm sure you and your audience are aware of. Tech giants are battling for access to critical GPU resources. It's a massive race to create increasingly intelligent AI until we reach AGI or ASI, and then everything changes in the world.

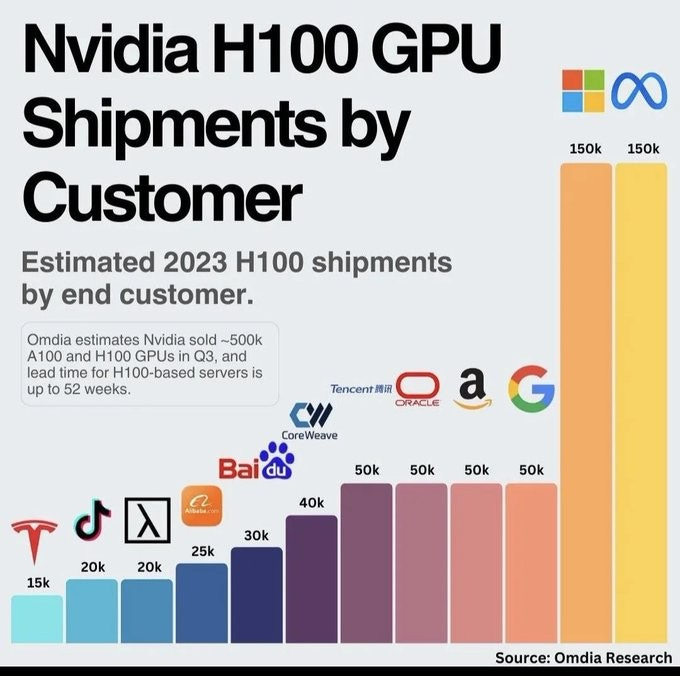

What's interesting about this race is that it's driven by a straightforward principle: if you add more GPUs and data to the ecosystem, the AI gets smarter. Plot compute against intelligence and you get a line running from the bottom left to the top right. The type of GPU needed for this is crucial. You can't do this on a consumer-grade GPU or a low-power graphics card. All the big companies in the AI race, whether on the training or application side, use enterprise-grade GPUs. For the last year and a half to two years, the specific model they've been hunting for is Nvidia's H100.

The key takeaway is that enterprises are the ones with a significant appetite for AI compute. They're building their businesses on this compute infrastructure. So we have to ask what kind of compute they want: which GPUs, and what quality, performance, and uptime they need to meet their internal metrics. It's like Netflix needing servers with a high uptime guarantee to avoid service interruptions. The same goes for GPUs and any compute provider; they must meet stringent service, quality, uptime, and performance requirements.

Unfortunately, most compute networks in the Web3 space aggregate consumer GPUs. This is the easiest way to build a network: give tokens to a community whose members contribute their idle gaming GPUs. That quickly onboards a lot of GPUs and builds a strong community excited about token rewards, which is why so many of today's compute networks aggregate consumer GPUs.

The challenge is that to have a real business, you need to sell the aggregated compute. However, consumer GPU networks hit a low ceiling quickly because 99.9% of companies don't want to buy compute from a consumer decentralised network. Those networks can't guarantee that a GPU won't be switched off at night or that bandwidth won't be eaten up by household activities like streaming Netflix. This creates an extreme disconnect between enterprise requirements and what consumer GPU networks can deliver.

From day one, we decided not to aggregate any consumer GPUs. Every GPU connected to Aethir is enterprise-grade, integrated via enterprise network infrastructure, and located in data centres suitable for enterprise workloads. The biggest AI companies, telcos, and tech companies can do anything they need with our network without sacrificing performance or quality. In fact, they get higher performance and a better overall experience.

For example, IO.net had a lot of FUD to overcome about their massive consumer GPU network. When they wanted to prove their network could handle real business, they rented enterprise GPUs from Aethir. So, all the enterprise GPUs on IO.net are provided by Aethir. This is public knowledge within the ecosystem.

Aethir has been built from day one to serve enterprise customers, which is crucial.

One other thing: this has confused people in the AI space before when I've explained what we do. Decentralised GPUs, by definition, mean we don't own any of the GPUs. We have around 43,000 GPUs in data centres, and we don't own a single one. Of those, just over 3,000 are H100s, which is by far the largest collection of H100s in Web3, almost 10x our nearest competitor. That's why big AI companies use our infrastructure: we can actually serve them.

The point of confusion for some AI companies is the importance of what we call “co-located machines”. If you're doing a large training run, like OpenAI or similar, and you need 500 or more H100s, those GPUs must be in the same data centre. You can't have one H100 in Japan, one in the US, and 200 in India; large models can't be trained effectively on geographically distributed hardware. This is a big technical challenge that other DePIN companies have struggled with, and it's currently an unsolved problem. I think it's a huge opportunity, but it's incredibly complex.

Since Aethir focused on enterprise from the beginning, we understood that serving enterprise clients means more than just having a bunch of disconnected enterprise-grade GPUs. We also need to think about the co-location of machines in our network. So, Aethir has a significant number of large co-located clusters of high-performance GPUs. This means we're not just a distributed network with enterprise-grade GPUs worldwide; we have large co-located clusters contributing to our network, allowing us to handle those big AI jobs for companies that need co-located machines.
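To make the co-location constraint concrete, here is a minimal Python sketch of how a scheduler might place a large training job. It assumes a simple best-fit rule and uses hypothetical names (`Cluster`, `place_training_job`) and made-up cluster sizes; it is not Aethir's actual scheduler. What it shows is that a job either fits inside one data centre or doesn't run, regardless of how many GPUs the network holds in total.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cluster:
    """A hypothetical data centre contributing GPUs to the network."""
    name: str
    gpu_model: str
    free_gpus: int


def place_training_job(clusters: list[Cluster], gpus_needed: int,
                       gpu_model: str = "H100") -> Optional[Cluster]:
    """Return one cluster that can host the whole job, or None.

    The job is never split across sites: every GPU must sit in the same
    data centre, so only what a single site can offer matters, not the
    network-wide total.
    """
    candidates = [c for c in clusters
                  if c.gpu_model == gpu_model and c.free_gpus >= gpus_needed]
    if not candidates:
        return None
    # Best fit (an assumed policy): pick the smallest site that still fits,
    # keeping larger sites free for even bigger jobs.
    return min(candidates, key=lambda c: c.free_gpus)


if __name__ == "__main__":
    # Hypothetical sites; total free H100s across them = 1,140.
    sites = [
        Cluster("tokyo-1", "H100", 200),
        Cluster("us-east-1", "H100", 640),
        Cluster("mumbai-1", "H100", 300),
    ]
    for size in (500, 700):
        site = place_training_job(sites, size)
        print(size, "->", site.name if site else "no single site fits")
```

With these hypothetical sites, a 500-GPU job lands only in `us-east-1`, and a 700-GPU job gets no placement at all even though the network holds 1,140 H100s in total.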

What was the inspiration for Aethir?

So actually, it was cloud gaming networks that got us excited about distributed GPU compute. The team met in Beijing, where I lived for about seven years. I moved there to found my first company and eventually ended up working on scaling cloud gaming networks.

Long story short, we had this idea that we could address the performance and scalability challenges of cloud gaming networks by distributing the hardware in a decentralised manner. At a high level, the premise was that latency is the killer of these networks. The further a user is from the compute, the poorer the user experience because of increased latency. The idea was, if you remove the incentive to centralise compute, you can have a more distributed network. As the network gets bigger and more distributed, the likelihood of a user being closer to the compute increases, reducing latency and improving performance.

Centralised solutions focus on putting all resources in one location for economies of scale, but this doesn't add value from a user perspective. It actually limits network performance. If you were building a network optimised for user experience, you’d distribute the compute all over the place so users are always close to it. We thought, if we could solve the distribution and unit economics challenges, we could address the issues that stopped services like Google Stadia from deploying where they were needed.
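The latency argument can be sketched with a little arithmetic. The Python below computes a lower bound on round-trip time from a user to the nearest GPU node. It assumes signals travel through fibre at roughly 200 km per millisecond (about two-thirds of the speed of light) along the great-circle path, and it ignores routing, queuing, and video encoding, which all add more. The coordinates are illustrative (a user in Jakarta, a node in Oregon, and an added node in Singapore), not real Aethir locations.

```python
import math

# Assumed propagation speed in optical fibre: about 200 km per millisecond.
# This gives a floor only; real networks add routing and processing delay.
FIBRE_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    # Clamp to guard against tiny floating-point overshoot above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def min_rtt_ms(user, nodes):
    """Lower-bound round-trip time from a user to the nearest node."""
    nearest_km = min(great_circle_km(user, n) for n in nodes)
    return 2 * nearest_km / FIBRE_KM_PER_MS


if __name__ == "__main__":
    jakarta = (-6.2, 106.8)
    centralised = [(45.6, -121.2)]               # one hypothetical site in Oregon
    distributed = centralised + [(1.35, 103.8)]  # plus a hypothetical Singapore node
    print(f"centralised: {min_rtt_ms(jakarta, centralised):.1f} ms")
    print(f"distributed: {min_rtt_ms(jakarta, distributed):.1f} ms")
```

With only the Oregon node, the floor is well over 100 ms before any frame is rendered; adding the Singapore node brings it under 10 ms. Adding nodes can only shrink the distance to the nearest one, which is the effect described above.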

That’s where we started, and we quickly realised our relevance to the AI sector and began building products there as well.

Another bullish thing to look at is the global gaming population, which is around 3.3 billion people. The majority, about 2.8 billion, game on low-end devices, which means mainstream AAA titles are inaccessible to them, and likewise these gamers are inaccessible to AAA developers.

The most feasible way to unlock this market is to use cloud gaming to abstract away the hardware requirement for users. Simply take technology that we already have and make it more cost-efficient to scale. Now you have a technology that unlocks these 2.8 billion gamers, no matter where they are. And that's exactly what building the network in a decentralised way does.

We're bringing a hardware-decoupled gaming experience to billions of gamers all over the world in a way that fundamentally wouldn't be possible otherwise. That's why I'm so bullish on the gaming side; it was our original vision.

Do you see the demand for decentralised computing significantly increasing? Or do you think we are already at that point, but it will take more time for customers to adopt this technology?

I think, to be honest, if we're talking about decentralised cloud solutions, or just about Aethir, it’s mostly about education. You don’t need to know that Aethir is a decentralised cloud provider to work with us. We work comfortably with Web2 companies—90% of our customers are Web2 companies, and they’re very happy with the services we provide.

Looking at the broader AI ecosystem, what's the inflection point? There are some crazy statistics out there.

I read a research paper a couple of weeks ago that said, based on the forecasted growth in demand for compute, by 2030 there won't be enough power on Earth to meet the compute needs of AI. It's totally insane.

You've got these big macro numbers showing massive capital being deployed into the ecosystem. The numbers are almost too big to comprehend. But if you zoom in a bit, you've got two types of compute requirements: training and inferencing. Training is where you make the AI more intelligent, like going from GPT-4 to GPT-5. Inferencing is when the AI does its job, like answering questions.

People like you and me are mostly using ChatGPT or one of the other big models, right? Like ChatGPT through the Microsoft ecosystem or Gemini through Google. Most of our interactions are with generalised large language models from a very small number of companies. But if we look a year ahead, given the exponential growth in the sector, my guess is you'll be interacting with AI in far more places than you currently are.

Soon we’ll be interacting with AI in more meaningful, agentic ways. AI will be doing more things for us, like booking tickets, providing assistance services, and handling customer service calls. It’s going to be so much more than it is today.

If you follow the compute upstream, unless a company is just using the ChatGPT API to create an application, they’re likely building their own AI product, which means they have their own compute infrastructure needs. So, as the inferencing space grows, it's going to become more fragmented. Right now, most infrastructure for training comes from a small number of big players. While there are companies developing new competitors to these large language models, the explosion of AI applications on the inferencing side will lead to a more fragmented compute market.

This means we'll see a huge amount of demand coming from channels with competitive pricing and friendly contracts for startups and smaller businesses. That’s probably the most imminent inflection point I see coming.

Another product in the Aethir ecosystem is the A-Phone. Could you tell us more about it and who the target audience is?

The A-Phone is building and scaling directly on our infrastructure. It uses our cloud gaming technology to stream real-time rendering in a low-latency manner to the device. It's super cool because it’s all about access. For example, you can have a $150 smartphone, download the Aethir application, and open it to access the equivalent of a $1,500 device. All the hardware constraints of your local device disappear because you have the power of the cloud enabling that application.

You can have almost unlimited applications open on your Aethir phone, and it won’t drain your battery. All the compute, processing, and storage are done in the cloud, basically giving you a super phone that you can call on whenever you want to run any application you need.

Whether it’s a game or an education platform with video conferencing, it’s just super cool. It removes the barrier that hardware puts on people’s access to content, tools, or utilities, especially for mobile users, who make up the vast majority of internet users.

What do you think has been the key to Aethir's success so far: your tech solution, or your business development efforts?

I think there are two elements we've focused on extensively as a company. First is the enterprise element I mentioned before. That meant making some very hard decisions early on. As I said, it's much easier to aggregate consumer GPUs. It's much harder to aggregate enterprise-grade GPUs, which we already knew were difficult to find and access. We took the harder road, which put us at risk in our early days. But because we did the hard work then, we're better off now. Not many companies have the conviction to do something that risky early on, and that has made a big difference for us.

Secondly, we've always been incredibly focused on real business—real utilization, real contracts, real revenue. This focus has been massive for us from the beginning. That's why we chose the enterprise pathway. We wanted to take the best of Web3 technology and deliver industry-changing solutions, not just Web3 solutions, but top-of-the-line industry solutions within the AI and gaming sectors.

Our business development team has been crucial in convincing partners to join our ecosystem, especially in the early days. On the tech side, we've made the process of connecting compute resources seamless. Currently, we have more supply looking to enter our ecosystem than we can accept. In the future, we aim to be a truly permissionless, fully decentralised ecosystem, and we'll get there. But at the start, we had to be pragmatic. Opening the floodgates to compute and having a massive number of GPUs draining your token emissions is not a great business move.

We see ourselves as a supply-led organisation. We always try to have more supply than demand. We don’t want to turn away demand, but we also don’t want a massive gap between supply and demand. We want to grow the relationship between supply and demand intelligently and steadily. We're not just onboarding unlimited GPUs to boast about our numbers; that's not the right play.
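The supply-led idea can be expressed as a simple admission rule. The Python sketch below is hypothetical: the function name, the 20% buffer, and the 500-GPU batch cap are assumptions chosen for illustration, not Aethir's published parameters. It keeps supply a fixed margin above demand (so demand isn't turned away), stops admitting once that margin is reached (so there is no large idle surplus earning rewards), and onboards waiting GPUs in bounded batches (so growth stays steady).

```python
def gpus_to_admit(supply: int, demand: int, waiting: int,
                  buffer_pct: int = 20, max_batch: int = 500) -> int:
    """Number of waiting GPUs to onboard this round under a hypothetical policy.

    supply     -- GPUs already serving on the network
    demand     -- GPUs currently under contract
    waiting    -- GPUs queued to join
    buffer_pct -- assumed target margin of supply over demand, in percent
    max_batch  -- assumed cap on GPUs onboarded per round
    """
    # Target supply = demand plus the buffer, rounded up with integer maths.
    target = (demand * (100 + buffer_pct) + 99) // 100
    # Only fill the gap up to the target; anything beyond would sit idle.
    shortfall = max(0, target - supply)
    # Never admit more than is waiting, and never more than one batch per round.
    return min(waiting, shortfall, max_batch)


if __name__ == "__main__":
    print(gpus_to_admit(supply=1_000, demand=1_000, waiting=5_000))
    print(gpus_to_admit(supply=1_300, demand=1_000, waiting=5_000))
```

In the first call supply merely matches demand, so the rule admits 200 GPUs to restore the 20% buffer. In the second, supply is already 30% above demand, so no one is admitted this round even though 5,000 GPUs are waiting, which mirrors having more supply queued than the network accepts.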

We are planning some big announcements in the next couple of weeks that will show our commitment to transparency. This will be really interesting for people and will show that Aethir is a company people want to engage with.

Could you tell us about the Aethir token? How does it fit into the ecosystem and how will it accrue value?

That's actually the subject of a bigger release that you will all get to see soon. I can't speak any more on that topic right now, but what I can say is that a lot of projects have previously had difficulty engaging with big Web2 entities due to the requirement of dealing with tokens.

It's been a constant challenge in the space, and we have a very exciting and novel solution for it. I think people are going to be really bullish when they see it. It will allow us to drive a lot of volume to the token as a result.

Our biggest customers are Web2 customers, and I don't see that changing. We need to make sure we're taking that business and allowing that value to accrue to the Aethir token and the ecosystem it supports. That's our commitment, and I think you'll see some really interesting stuff over the next week about how that's going to happen.


And that’s your alpha.

Not financial or tax advice. This newsletter is strictly educational and is not investment advice or a solicitation to buy or sell any assets or to make any financial decisions. Cryptocurrencies are very risky assets and you can lose all of your money. Do your own research.
