Crypto x AI - Going Down the Rabbit Hole

Making sense of Crypto x AI & 17 projects to watch

Welcome to the alpha please newsletter.

“When the great innovation appears, it will almost certainly be in a muddled, incomplete and confusing form. To the discoverer himself it will be only half understood; to everybody else, it will be a mystery. For any speculation which at first does not look crazy, there is no hope.” – Freeman Dyson

In this piece, I explore ongoing and potential integrations of crypto and AI, and I present 17 projects that you might consider adding to your watchlist.

Get ready: I’m dropping a lot of alpha (this took a really long time lol).

But before going down the rabbit hole, let me say one thing: we are only just scratching the surface of Crypto x AI. This field is nascent, complex and really speculative at the moment.

I am but a humble crypto researcher who is trying to get up to speed with a newly emerging vertical, so please exercise caution when investing in this area. We are in the very early speculative phase, and it’s likely that prices this cycle will get far ahead of the technology and fundamentals.

With that being said, let’s dig in.

What’s to come?

A general overview of AI

The AI Stack

Why crypto and AI are a good fit

Different emerging crypto x AI verticals

Crypto x AI watchlist (17 projects)

A general overview of AI

Artificial Intelligence (AI) is a dense subject, and it would take years of study to truly comprehend every aspect of it. For the sake of this post, I will define AI as the field that tries to mimic or simulate human cognitive intelligence to perform a wide range of tasks, such as learning, reasoning, solving problems, or understanding natural language.

While AI was a niche area of R&D for many years, its real mainstream breakthrough came with the arrival of ChatGPT. We all remember how mind-blown we were when we first interacted with this generative AI bot. Looking back, it is fair to say it was an “iPhone”-like moment.

At the time, it had the fastest adoption of any consumer product in history, scaling to 100 million users in 2 months. In contrast, it took Facebook 1,500 days to reach the same user count.

We are now witnessing exponential growth in this field. Given ARK’s estimate that model training performance could increase 5x in 2024 alone, it seems very likely that AI will continue to unlock a wide range of use cases.

In the upcoming years, it would not be surprising to see several multi-billion-dollar companies emerge, both among applications that leverage AI and in the infrastructure that makes the AI revolution possible. In fact, funding in this area has recently boomed.

Speaking of that, let’s look a bit further at what actually makes this thing called AI possible.

The AI stack

If you’re like me, the first thing that comes to mind when you think about AI is ChatGPT and generative AI prompts. But this is only the tip of the iceberg; the AI field is much more complex. To better understand it, here’s a quick overview of the different layers of technologies and components that make up the AI stack:

Compute hardware

AI is not only about the code. AI is resource-intensive, and specialized physical infrastructure such as neural processing units (NPUs), graphics processing units (GPUs), and tensor processing units (TPUs) is essential. Ultimately, this is what provides the physical means to perform the calculations and execute the algorithms that make AI systems work. Without it, there would be no AI.

Industry leaders in this field are Nvidia (which needs no introduction by now), Intel, and AMD. They compete to develop the most efficient hardware for both model training and inference workloads.

Nvidia has been one of the most straightforward ways to get exposure to this revolution so far (as shown by Nvidia’s recent price action).

Cloud platforms

AI developers rely on hardware to run their models. Typically, they have two ways to get this hardware capacity: they can either run GPUs locally or rely on cloud providers. The first option often proves too expensive to be worth it, and over time cloud providers have become an attractive alternative.

These are big corporations with a lot of resources that acquire and operate this powerful hardware and let developers use it on a pay-as-you-go or subscription basis. This eliminates the need for developers to invest in and maintain their own physical infrastructure.

Industry leaders in this field include AWS, Google Cloud, and Nvidia DGX Cloud. Their objective is to give developers of all sizes fast access to multi-node supercomputing for training the most complex LLMs.

Models

On top of that comes the most complex and hyped part of AI: ML (machine learning) models. These are computational systems that learn to perform tasks from data rather than from explicit programming instructions, and they represent the brain of an AI system.

The ML pipeline is divided into three steps (data, training, and inference), and there are three main types of learning: supervised learning, unsupervised learning, and reinforcement learning.

• Supervised learning refers to learning from labeled examples (provided by a teacher). A model can be shown pictures of dogs and told by the teacher that these are dogs; the model then learns to distinguish dogs from other animals.

• Many popular models, such as LLMs (e.g. GPT-4 and LLaMA), are pretrained using unsupervised (more precisely, self-supervised) learning. In this mode of learning, no labeled examples are provided by a teacher; instead, the model learns to find patterns in the data, for instance by predicting the next word in a text.

• Reinforcement learning (learning from trial and error) is mainly used in sequential decision-making tasks such as robotic control and playing games (e.g. chess or Go).
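To make supervised learning concrete, here is a minimal sketch in Python of the data, training, and inference steps. It uses a nearest-centroid classifier, one of the simplest supervised methods and not how models like GPT-4 work; the features (weight in kg, height in cm) and the sample data are made up for illustration.

```python
from statistics import mean

# Hypothetical labeled training data: (weight_kg, height_cm) -> label.
TRAINING_DATA = [
    ((30.0, 60.0), "dog"),
    ((25.0, 55.0), "dog"),
    ((35.0, 65.0), "dog"),
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((3.5, 24.0), "cat"),
]

def train(examples):
    """Training: compute the average feature vector (centroid) of each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*points))
        for label, points in by_label.items()
    }

def predict(centroids, features):
    """Inference: return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))

model = train(TRAINING_DATA)
print(predict(model, (28.0, 58.0)))  # dog
print(predict(model, (4.5, 26.0)))   # cat
```

Here "training" is just averaging. Modern models replace it with gradient-based optimization over millions or billions of parameters, but the shape of the pipeline (labeled data in, a trained model out, predictions on new inputs) is the same.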

Lastly, these models can be either open source (available on model hubs like Hugging Face) or closed source (like OpenAI’s models, which are exposed via APIs).

Applications

This is the last layer of the AI stack and the one we usually interact with as users. Applications can be B2B or B2C, and they build on top of AI models. A popular example is Replika, an app that lets you design a virtual partner and chat about anything, 24/7. Judging by user reviews, it appears to have had a tangible impact on the lives of many.

Overall, it appears that these technological layers are still in their early stages of development and that we are only at the beginning of what some like to call a Cambrian explosion. With that in mind, let’s look at why crypto has a role to play in this technological boom.

Why crypto and AI are a good fit

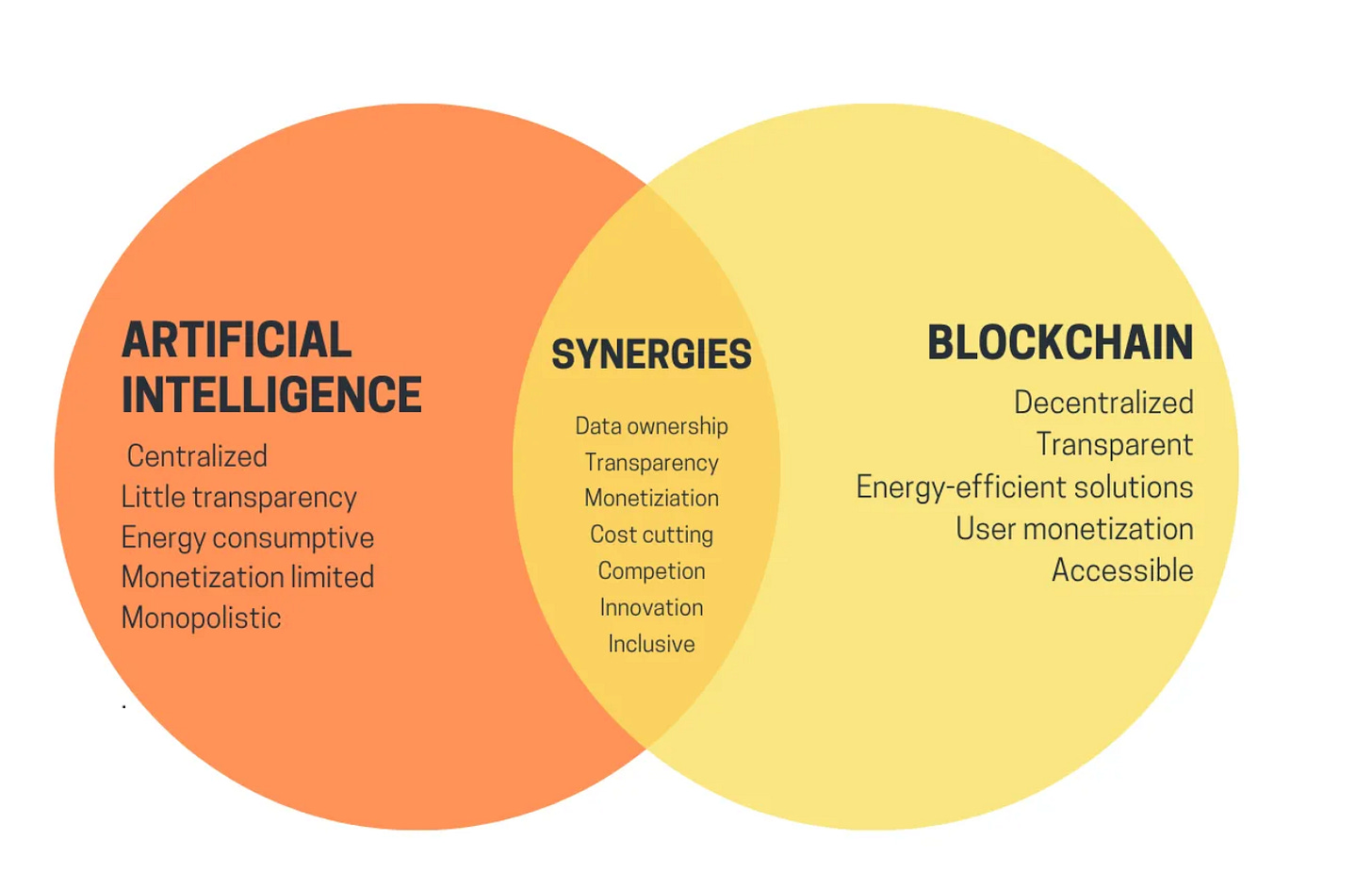

While crypto is not necessarily essential in every layer of the AI stack, there are many reasons to believe that decentralized AI is as important as decentralized money, that smart contracts can leverage machine learning to provide powerful user experiences, and that crypto can bring more security and transparency to AI and unlock new use cases for it.

AI is dominating the crypto landscape

The market is already showing a lot of enthusiasm for the potential applications of crypto and AI, and it has become the hottest narrative. Since the beginning of 2024, AI tokens have performed very well relative to other sectors within crypto.

With all the further developments set to take place in this field, there are many reasons to believe that we are still in the very early days and that the bubble is likely just forming.

Speaking of that, let’s look at the different developments taking place at the intersection of crypto and AI.

Different emerging crypto x AI verticals

Here are some of the main synergies between crypto and AI:

From centralized cloud providers to DePIN:

As we’ve discussed, the foundational layers of AI are hardware and cloud providers. While crypto cannot compete in producing better hardware (and there’s no reason it should), it is fair to assume that it has a role to play in providing access to multi-node supercomputing in a more efficient, more secure, and decentralized way. This is part of a subfield of crypto called DePIN, or Decentralized Physical Infrastructure: blockchain protocols that incentivize decentralized communities to build and maintain physical hardware.

Here, the main use cases of DePIN for AI would be:

Cloud storage

Computing power

The idea here is simple: AI developers need more GPUs and data storage capacity, and there are many reasons to believe that crypto DePIN projects can bring new computing and storage supply to the market by activating latent resources with token-based incentives.
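The token-incentive idea can be sketched in a few lines. This is a hypothetical mechanism, not the reward formula of any specific DePIN project: a fixed number of tokens is minted each epoch and split pro rata among providers according to the GPU-hours they served. The function name `distribute_emissions`, the `epoch_emission` parameter, and the GPU-hour metric are all assumptions for illustration.

```python
from fractions import Fraction

def distribute_emissions(epoch_emission, contributions):
    """Split a fixed per-epoch token emission pro rata to contributed resources.

    epoch_emission: tokens minted this epoch (hypothetical parameter).
    contributions: mapping of provider -> GPU-hours served this epoch.
    Returns a mapping of provider -> tokens earned, as exact fractions.
    """
    total = sum(contributions.values())
    if total == 0:
        # Nobody contributed this epoch, so nobody earns anything.
        return {provider: Fraction(0) for provider in contributions}
    return {
        provider: Fraction(epoch_emission) * Fraction(hours) / total
        for provider, hours in contributions.items()
    }

rewards = distribute_emissions(1_000, {"alice": 30, "bob": 50, "carol": 20})
print({provider: float(tokens) for provider, tokens in rewards.items()})
# {'alice': 300.0, 'bob': 500.0, 'carol': 200.0}
```

Exact fractions are used so that payouts always sum to the epoch emission. Real networks add further mechanisms on top, such as checking that the claimed work was actually performed.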

Here’s a great overview of the entire DePIN sector if you fancy diving deeper.

Enabling transparency, user governance, and data ownership:

AI is going to be bigger than the internet. This means that understanding which models are used, how they work, and which data is fed into them is becoming crucial for a free and democratic society to work well. With that in mind, it feels like the never-ending debate over black-box models and the outsized power of Web2 giants could be settled by giving ownership to users through AI tokenization (from infrastructure all the way to models and apps).

Knowing the provenance of the AI models someone is using can be very important in some circumstances. As with everything, models have biases, and depending on how they are built and what data they are trained on, their outputs can be totally different. There is a strong case to be made that AI models and training should be decentralized and on-chain to allow for more transparency.

We don't need the Senate or opaque entities deciding the direction of the world (through AI models) or controlling our data without consent, nor do we need endless terms and conditions that, let's be honest, we will never actually read. We want the opposite: transparency and user governance at the forefront of everything, and control over our own data.

By utilising crypto infrastructure, we can avoid repeating the mistakes we made with internet apps. Instead, we could have collective ownership, decentralized governance, and transparency at all levels. This is the path forward.

Aligning incentives & AI monetization:

High quality training data is one of the primary contributors to model performance. However, as ARK mentioned, premium sources for high-quality training data might be depleted by 2024, potentially leading to a plateau in model performance.

Here, crypto could incentivize individuals to monetize both private and public datasets, as well as AI models, agents, and other parts of the AI stack. With multi-sided, global, permissionless markets, anyone can be compensated for contributing. It also becomes possible to incentivize people to maintain the quality of the data used to train foundational AI models and/or to provide different models to a particular network.

Crypto is driving a financialization boom. The AI stack needs its own payment mechanism. Sounds like a good fit, right?

On-chain AI/ML (ZKML & opML):

Zero-knowledge cryptography is one of the most popular technologies in web3, as it makes it possible to create proofs of “integrity” for a given computation, where the proof is significantly easier to verify than the computation is to perform.
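ZK proof systems are too involved for a short snippet, but the underlying asymmetry (checking is cheaper than computing) can be illustrated with a much older technique: Freivalds’ algorithm. It probabilistically checks a claimed matrix product C = A·B, the core operation of neural-network inference, in O(n²) time per round instead of the O(n³) needed to recompute it. Note that this is a swapped-in illustration, not a ZK proof: the verifier sees all the data, and the guarantee is probabilistic rather than cryptographic.

```python
import random

def matmul(a, b):
    """Naive O(n^3) matrix multiplication: the expensive work the prover does."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)] for i in range(n)]

def mat_vec(m, v):
    """O(n^2) matrix-vector product."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]

def freivalds_check(a, b, c, rounds=20, rng=None):
    """Probabilistically check that a times b equals c.

    Each round picks a random 0/1 vector r and compares a(b r) with c r.
    A wrong c passes a single round with probability at most 1/2,
    so it survives every round with probability at most 2**-rounds.
    """
    rng = rng or random.Random()
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(len(c[0]))]
        if mat_vec(a, mat_vec(b, r)) != mat_vec(c, r):
            return False
    return True

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul(A, B)            # honest result: [[19, 22], [43, 50]]
bad = [[19, 22], [43, 51]]  # tampered result

print(freivalds_check(A, B, C))    # True
print(freivalds_check(A, B, bad))  # almost certainly False
```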

When we talk about ZKML, we are talking about bringing ZK proofs to the “inference” and “data” parts of the machine learning pipeline (and not to the training part, which is itself too computationally intensive). As research and technology in this field evolve, we may see more efficient and scalable solutions that could make ZKPs applicable to the training phase of machine learning models.

With ZKML, parts of the computation (such as the model weights or the input data) can be hidden from the verifier, while the prover still proves that the ML computation was performed correctly without revealing any further information.
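Real zkML proof systems are far too involved to show here, but the core zero-knowledge idea (convincing a verifier that you know something without revealing it) fits in a short sketch. The example swaps in a much simpler, classic technique: a Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. It is not zkML, and the group parameters below are toy-sized and insecure; they are chosen only so the arithmetic stays readable.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 is a safe prime, and G = 4 (a nonzero square)
# generates the subgroup of prime order Q. Far too small to be secure.
P = 2039
Q = 1019
G = 4

def challenge(*values):
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x):
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)
    k = secrets.randbelow(Q - 1) + 1   # fresh random nonce in [1, Q-1]
    t = pow(G, k, P)                   # commitment
    c = challenge(G, y, t)             # challenge
    s = (k + c * secret_x) % Q         # response; reveals nothing about x on its own
    return y, (t, s)

def verify(y, proof):
    """Accept iff G^s == t * y^c mod P. The verifier never learns x."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

x = 777                  # the prover's secret
y, proof = prove(x)
print(verify(y, proof))  # True
```

The check works because G^s = G^k · (G^x)^c = t · y^c, so only someone who knows x can produce a valid response for a challenge they don’t control.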

opML (Optimistic Machine Learning) is another approach that enables AI model inference and training/fine-tuning on a blockchain using an optimistic approach: results are assumed to be correct unless someone challenges them, similar to optimistic rollups like Optimism and Arbitrum. Through such mechanisms, LLaMA 2 and Stable Diffusion models can now be made available on-chain.
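The “optimistic” part can be illustrated with the bisection-style dispute game used by optimistic rollups such as Arbitrum, which opML-style systems adapt for ML computation. In this simplified sketch (all names hypothetical), a computation is a sequence of deterministic steps, and the prover posts a commitment to the state after every step. A challenger who recomputes and disagrees then binary-searches for the first step where the two parties diverge, and a referee (the smart contract, in a real system) re-executes only that single step. Bonds, dispute windows, Merkleized state, and the on-chain VM are all omitted.

```python
import hashlib

def step(state: int, i: int) -> int:
    """One deterministic step (hypothetical stand-in for, e.g., one layer of a model)."""
    return (state * 31 + i) % 1_000_003

def run_trace(initial: int, n_steps: int, fault_at=None):
    """Return states s_0..s_n; optionally corrupt one step to model a dishonest prover."""
    states = [initial]
    for i in range(n_steps):
        nxt = step(states[-1], i)
        if i == fault_at:
            nxt += 1  # the dishonest prover lies about this one step
        states.append(nxt)
    return states

def commit(state: int) -> str:
    """Hash commitment to an intermediate state."""
    return hashlib.sha256(str(state).encode()).hexdigest()

def bisect_dispute(prover_commits, challenger_commits):
    """Binary-search for the disputed step in O(log n) rounds.

    Invariant: the parties agree at index lo and disagree at index hi
    (they start out agreeing on s_0 and disagreeing on the final state).
    """
    lo, hi = 0, len(prover_commits) - 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if prover_commits[mid] == challenger_commits[mid]:
            lo = mid
        else:
            hi = mid
    return lo  # disputed transition: s_lo -> s_(lo+1)

def arbitrate(prover_states, i):
    """Referee re-executes only step i from the agreed state s_i."""
    return step(prover_states[i], i) == prover_states[i + 1]

N = 1_000
honest = run_trace(42, N)
dishonest = run_trace(42, N, fault_at=637)
i = bisect_dispute([commit(s) for s in dishonest], [commit(s) for s in honest])
print(i)                        # 637
print(arbitrate(dishonest, i))  # False: the prover's claimed result is rejected
```

The point of the design is cost: a dispute over a 1,000-step computation is settled by re-executing a single step after about ten rounds of bisection, so the chain only pays for computation when someone actually cheats.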

The latest solution from ORA, one of the projects covered later in this piece, combines zkML and opML, enabling Ethereum to run any model with privacy features.

This could lead to a new era of ML models that are on-chain and transparent, where it would be easy to verify that a given output is the product of a given model and input pair. In a world of opaque models and opaque datasets, this could be a game changer and give power back to users (which ties back to the ideas on transparency and user governance above).

Authentication & privacy:

As AI applications grow, we are approaching a tipping point where no one will know what is real or simulated online. Looking at this footage generated by Sora, the recent text-to-video model from OpenAI, do you think you could tell? And think about how much more convincing this will get in the years to come.

Given this reality, there’s a strong case to be made for decentralized identity stored on blockchains. This could protect people from unknowingly engaging with AI bots and help distinguish real information from deepfakes. In a world where a bank run can happen in a few clicks (as we all saw with SVB), it becomes crucial to provide proof of authenticity, and crypto seems to be the best way to do it.

Here’s a simple example of how it might work: the original author of a piece of content could digitally sign its “hash” on a blockchain, saying “I did this myself.” Another party (say, a media company) could attest to it by signing a transaction that says “I attest this.” Signers could identify themselves by cryptographically proving control of, say, a domain name (for instance, nytimes.com).
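The sign-and-attest flow above can be sketched as follows. This is a toy that assumes textbook RSA signatures over a SHA-256 hash. It uses publicly known Mersenne primes and no padding, so it is completely insecure; real systems would use a vetted library with schemes such as Ed25519 or ECDSA. Publishing the signatures on-chain and binding keys to a domain name such as nytimes.com are left out, and all helper names are hypothetical.

```python
import hashlib

def make_toy_keypair(p: int, q: int, e: int = 65537):
    """Textbook RSA key pair from two primes. Unpadded and insecure: illustration only."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)        # modular inverse (requires Python 3.8+)
    return (n, e), (n, d)      # (public key, private key)

def content_hash(content: bytes, n: int) -> int:
    """SHA-256 digest of the content, reduced mod n so it fits the toy key."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % n

def sign(content: bytes, private_key) -> int:
    """Sign the content hash with the private exponent."""
    n, d = private_key
    return pow(content_hash(content, n), d, n)

def verify(content: bytes, signature: int, public_key) -> bool:
    """Recover the hash with the public exponent and compare."""
    n, e = public_key
    return pow(signature, e, n) == content_hash(content, n)

# Hypothetical keys for an author and a media outlet (Mersenne primes: public, so insecure).
author_pub, author_priv = make_toy_keypair(2**61 - 1, 2**89 - 1)
media_pub, media_priv = make_toy_keypair(2**107 - 1, 2**127 - 1)

article = b"Original reporting, published by its author."
author_sig = sign(article, author_priv)   # "I did this myself."
media_sig = sign(article, media_priv)     # "I attest this."

print(verify(article, author_sig, author_pub))  # True
print(verify(article, media_sig, media_pub))    # True
# Tampered content: verification should fail.
print(verify(b"Deepfaked version", author_sig, author_pub))
```

Anyone holding the public keys can run `verify`, which is what lets readers check provenance without trusting the platform that served the content.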

In this way, information can be provable, transparent, immutable, and composable, which is becoming key in the post-AI world we are starting to live in.

Crypto x AI watchlist

By now, you might agree that there are many reasons to believe that a good AI watchlist could be one of your best assets during the next phase of this bull run.

Fortunately for you, that’s exactly what we’re going to look at. But first, let’s remind ourselves that speculation is everywhere right now and it is essential to exercise caution. The truth is, real tangible projects are rare nowadays. So what follows aren’t predictions, just ideas. And ideas change a lot as more data becomes available and time separates the signal from the noise.

This isn’t an exhaustive list, just projects I have managed to dive into and think are worth paying attention to. There is a lot going on in this category, and I have surely missed a ton of killer teams.

Having said that, let’s look at 17 projects that you might want to keep an eye on:

1. Render Network

TL;DR: Render is the pioneering decentralized GPU platform. In short, this project aims to unlock the full productive potential of decentralized GPUs to power two different kinds of projects: 3D content creation and AI.

Bull case: GPUs are already in short supply, and if AI continues on its current trend, the shortages will only grow more acute, which represents an opportunity for the Render Network. It is also one of the largest tokens in the sector and is likely to benefit from the AI narrative this cycle. Render also has multiple AI compute clients.

How to get exposure: RNDR

2. Akash Protocol

TL;DR: Akash is a decentralized compute marketplace that launched on mainnet in September 2020 as a Cosmos app chain. While the first iteration of Akash focused on CPU (central processing unit) compute, it has recently expanded to GPU compute, taking advantage of the paradigm shift in computing infrastructure driven by the AI boom (similar to Render).

Bull case: The current vision of the project in four words: “AirBnB for GPU compute.”

Here’s an interesting thesis on Akash from Modular Capital.

How to get exposure: AKT

3. Ora

TL;DR: ORA is a verifiable oracle protocol that brings AI and complex compute on-chain. Their solution, opp/ai, combines the advantages of zkML and opML, representing a leap forward from either approach alone.

Bull case: Their innovation marks a turning point in on-chain AI development, unifying the zkML and opML landscapes.

How to get exposure: Join their discord to get more updates and become an early contributor.

4. io.net

TL;DR: This is another interesting DePIN project, built on Solana, that gives access to distributed cloud clusters of GPUs at a small fraction of the cost of comparable centralized services.

Bull case: Decentralized AWS for ML training on GPUs. Instant, permissionless access to a global network of GPUs and CPUs. Revolutionary tech that allows GPUs to be clustered together in the cloud. Claims it can save large-scale AI startups 90% on their compute costs. Has integrated Render and Filecoin.

Good write-up here by Sterling Crispin.

How to get exposure: Join the io.net discord, they will be running a community programme which will result in an IO airdrop.

5. Bittensor

TL;DR: Bittensor is a decentralized, open source project that aims to create a neural network protocol on a blockchain, allowing for the creation of AI dApps and enabling the exchange of value between AI models in a peer-to-peer way.

Bull case: This is an ambitious project that has gained a lot of attention recently and has become the largest AI coin by market cap. TAO is likely to be one of the largest beneficiaries of speculation on AI this cycle.

Many threads here from DreadBong0 if you want to truly understand the tech, goals and vision of Bittensor.

How to get exposure: TAO & you can stake your TAO with validators to earn TAO emissions. You can also get involved more deeply if you want to contribute to the network by joining the discord.

6. Grass

TL;DR: Grass is the underlying infrastructure that powers AI models. Once you install the Grass web extension, it automatically sells your unused internet bandwidth to AI companies, who use it to scrape the internet and train their models. The result? You share in the growth of AI, earning a stake in the network for selling a resource you didn’t even know you had.

Bull case: Grass is creating a new revenue stream for every single person with an internet connection. Grass is aiming to become the data provisioning layer for decentralized AI. I have already talked about this project at length and interviewed the team (I encourage you to check it out).

How to get exposure: Run the Chrome extension in the background. It takes 2 minutes to set up, and you start earning Grass points, which will convert into the GRASS token later this year. Here is an invite to the closed beta.

7. Gensyn

TL;DR: The Gensyn Protocol is a layer-1 trustless protocol for deep learning computation that directly and immediately rewards supply-side participants for pledging their compute time to the network and performing ML tasks.

Bull case: This project has very, very strong backers, and will clearly be a major AI crypto infrastructure project if they can execute. Here is a link to the litepaper.

How to get exposure: Follow them on Twitter.

8. Allora

TL;DR: Allora is a self-improving decentralized AI network. Allora enables applications to leverage smarter, more secure AI through a self-improving network of ML models. By combining research at the frontier of crowdsourcing mechanisms (peer prediction), federated learning, and zkML, Allora unlocks a vast new design space of applications at the intersection of crypto and AI.

Bull case: Allora has been developed by Upshot, a market leader in developing AI x crypto infrastructure over the past 2.5 years. They are focused on financial use cases (AI-powered price feeds, AI-powered DeFi vaults, AI risk modelling, etc.), which could mean they find product-market fit (PMF) earlier than most.

Here is their recently released litepaper.

How to get exposure: Join the discord to keep an eye on how you can participate as an early community member.

9. Botto

TL;DR: Botto is a fully autonomous artist with a closed loop process and outputs that are unaltered by human hands. The only input humans have is voting on Botto outputs to guide what the artist does next.

Bull case: This unique project combines AI, art, NFTs, and DeFi, and is already generating real revenue ($4.5M since inception). Botto’s art has been sold at Christie’s. It’s the first ever AI artist you can invest in. Art sales revenue is distributed to stakers.

How to get exposure: BOTTO, or buying Botto’s NFTs on SuperRare.

10. Parallel (Colony)

TL;DR: Colony is a never-ending, AI-powered game in which all in-simulation items are on-chain. You’re paired with a Parallel Avatar who acts as your boots on the ground. You and your Avatar work together and share on-chain resources to navigate an ever-expanding Parallel world that’s powered by PRIME.

Bull case: PRIME is one of the only tokens that truly crosses over between gaming and AI. Colony could be a new genre-defining game and has real viral potential should the team execute. The studio producing it is probably the best in the web3 gaming space.

How to get exposure: PRIME and Parallel Avatar NFTs. Sign up to play the game when it launches.

11. Aethir

TL;DR: Aethir introduces a novel approach to cloud computing infrastructure, focusing on the ownership, distribution, and usage of enterprise-level GPUs. It functions as a marketplace and aggregator, facilitating the connection between supply-side participants—such as node operators and GPU providers—and users and organizations from computing-intensive sectors like AI, virtualized compute, cloud gaming, and cryptocurrency mining.

Bull case: Aethir looks to be another strong DePIN competitor within the GPU compute cloud category. They claim to have 20x more GPUs than Render. They will be launching in a very favourable environment within a hot sector.

How to get exposure: Participate in the upcoming node sale and join their discord.

12. Morpheus

TL;DR: Morpheus is building the first truly decentralized peer-to-peer network of personal smart agents to democratize AI for the general public.

Bull case: One cool fact about this project is that one of its contributors is Erik Voorhees, a true OG in this space. The project gives me Bittensor vibes. Write-up here.

How to get exposure: You can commit stETH to earn MOR tokens during the fair launch.

13. Autonolas

TL;DR: Autonolas is an open marketplace for the creation and usage of decentralized AI agents. But more than just that, it also provides a set of tools for developers to build AI agents that are hosted off chain and can plug into multiple blockchains including Polygon, Ethereum, Gnosis Chain, or Solana.

Bull case: Autonolas is one of the few AI projects with evidence of some adoption today. OLAS is one of the few tokens in the AI crypto category that is live right now and that people can bid on. Good write-up below.

How to get exposure: OLAS

14. MyShell

TL;DR: MyShell is a decentralized and comprehensive platform for discovering, creating, and staking AI-native apps.

Bull case: MyShell is a kind of AI app store, but also a platform that lets you create AI bots and applications. It allows anyone to become an AI entrepreneur and monetize their application. The product is live in production right now.

Some interesting content by the team.

How to get exposure: While they don’t have a token yet, you can sign up on their application and start interacting with bots to earn points (who knows what this could bring you).

15. OriginTrail

TL;DR: OriginTrail integrates blockchain and AI to offer a Decentralized Knowledge Graph (DKG) that ensures the integrity and provenance of data, enhancing AI capabilities by providing access to a verified information network. This amalgamation aims to improve the efficiency and reliability of AI agents across various industries by establishing a secure, trustworthy foundation for data creation, verification, and querying.

Bull case: Working product. Enterprise clients. My understanding is that knowledge graphs allow AI to interpret data and make sense of it in the context of everything else going on. TRAC also seems to have a cult following.

How to get exposure: TRAC

16. Ritual

TL;DR: Ritual is an open, sovereign execution layer for AI. Ritual will allow developers to seamlessly integrate AI into their apps or protocols on any chain, enabling them to fine-tune, monetize, and perform inference on models with cryptographic schemes.

With Ritual, the vision is for developers to be able to build fully transparent DeFi, self-improving blockchains, autonomous agents, generated content, and more.

Bull case: Ritual really has top-tier backers. Developers can try the Infernet SDK right now. I found one developer who launched an experimental NFT project using the SDK a few days ago. Pretty cool (I was too late to mint).

How to get exposure: Join their discord and keep paying attention.

17. Nillion

TL;DR: Nillion enables the training and inference of AI models in a secure and confidential manner, creating the backbone of secure personalized AI.

Bull case: Nillion’s blind computation network unlocks many new use cases, with personalized AI being an enormous untapped area. Personalized AI won’t be widely adopted unless private data processing exists. Nillion’s solution really sounds game-changing.

How to get exposure: Join their discord and keep your eyes open. If you are a developer, I believe they will be running some hackathons soon.

And that’s your alpha.

This research was powered by SwissBorg

One of the tokens mentioned in this piece is now live on SwissBorg. I think the SwissBorg app is probably the best mobile UX in the space. They also curate and offer alpha deals (early investing opportunities) to the community on the app.

Not financial or tax advice. This newsletter is strictly educational and is not investment advice or a solicitation to buy or sell any assets or to make any financial decisions. Cryptocurrencies are very risky assets and you can lose all of your money. Do your own research.
